Welcome to Webmaster Forums - Website and SEO Help.

What is the main work of Search Engine Spiders?

Started by bathsvanities, March 14, 2017, 08:31:31 AM


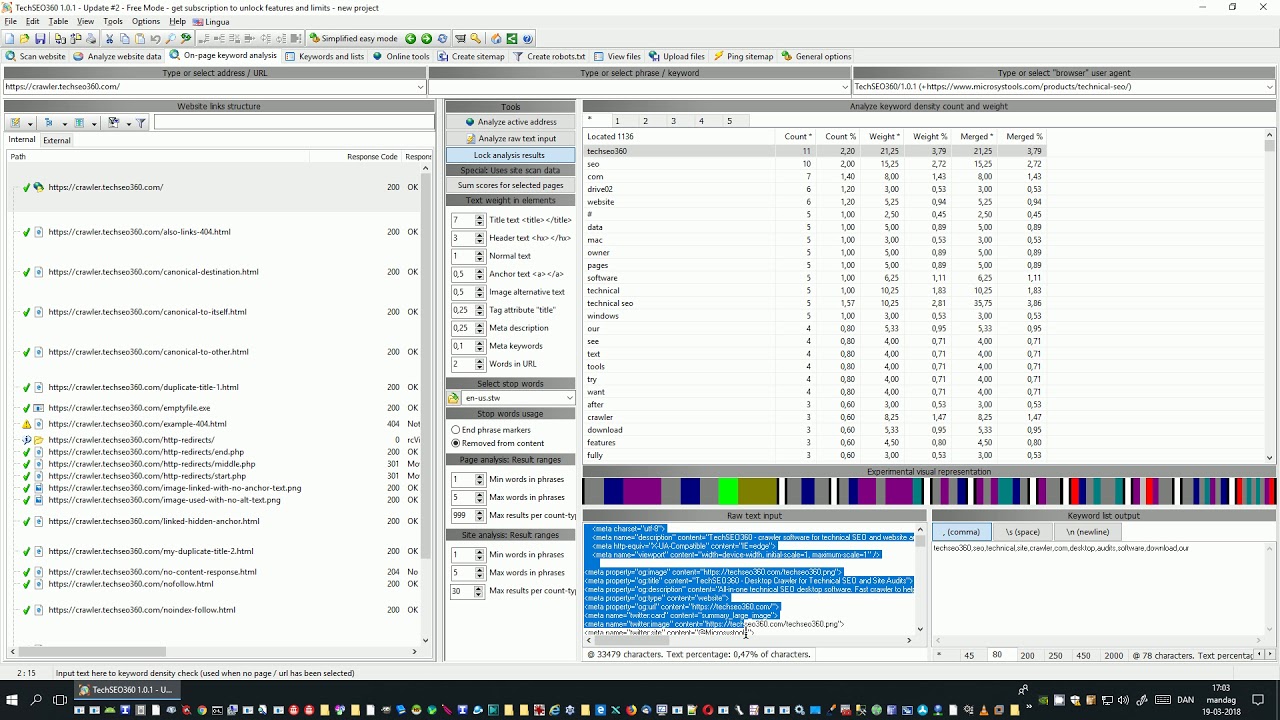
