- Welcome to Webmaster Forums - Website and SEO Help.

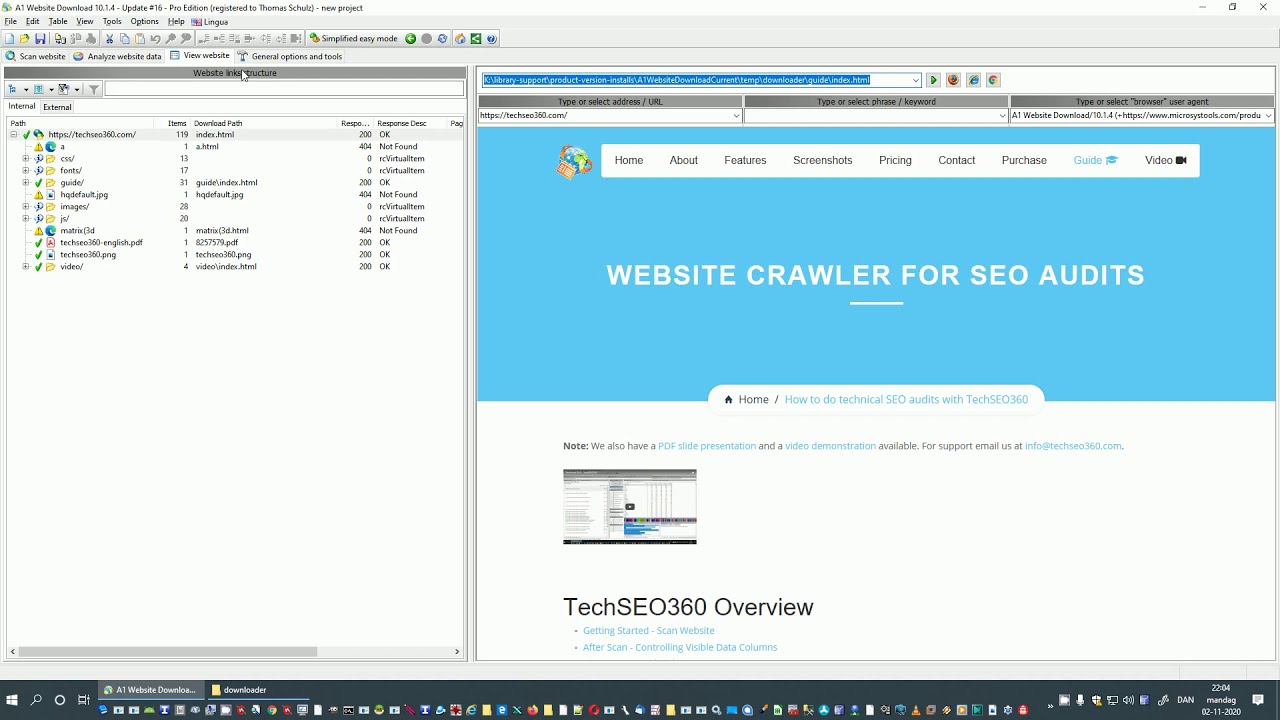

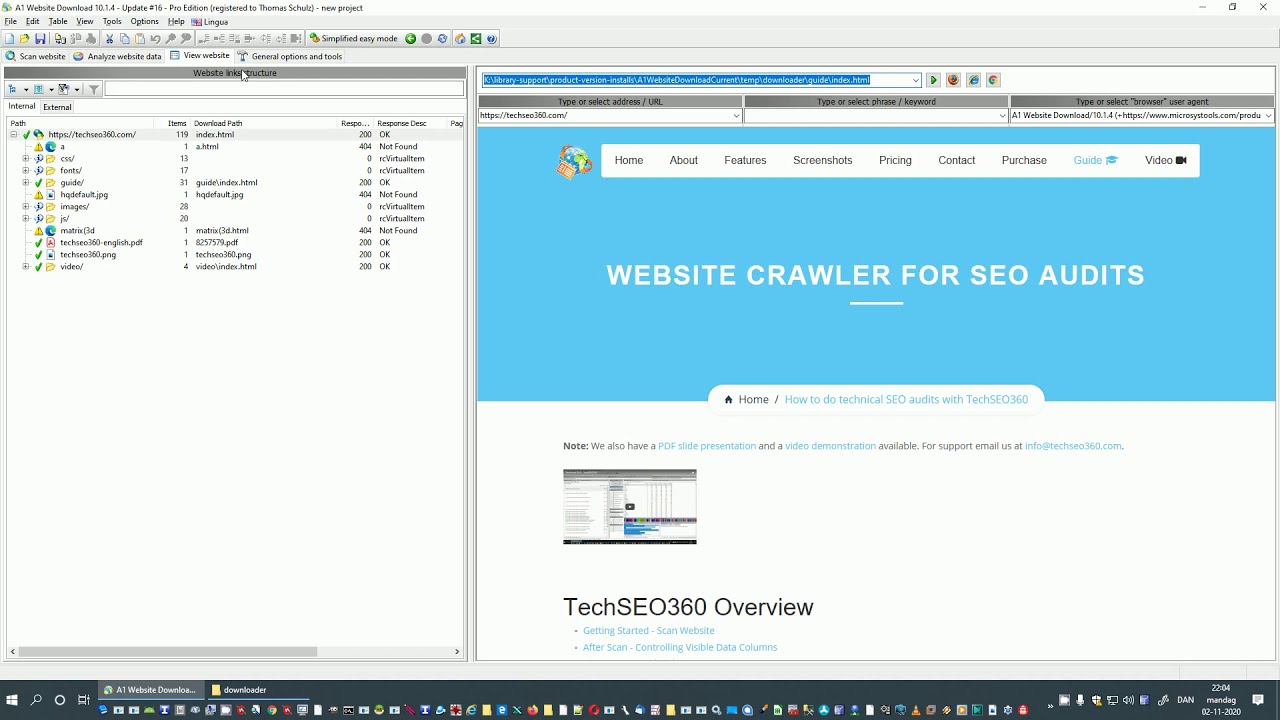

Limits and filters

Started by spiweb, February 21, 2012, 03:55:05 AM
